The comparison of kappa and PABAK with changes of the prevalence of the...

(PDF) The Kappa Statistic in Reliability Studies: Use, Interpretation, and Sample Size Requirements Perspective | mitz ser - Academia.edu

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

K. Gwet's Inter-Rater Reliability Blog: 2014. Inter-rater reliability: Cohen kappa, Gwet AC1/AC2, Krippendorff Alpha

Testing the Difference of Correlated Agreement Coefficients for Statistical Significance - Kilem L. Gwet, 2016

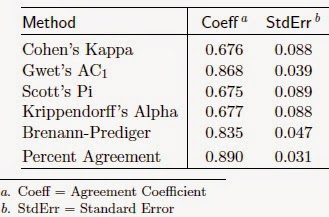

![[PDF] Can One Use Cohen's Kappa to Examine Disagreement? | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/2248247fc26c38213712c21a4a46ea47f3b4a25f/12-Table2-1.png)

![[PDF] Large sample standard errors of kappa and weighted kappa. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/f2c9636d43a08e20f5383dbf3b208bd35a9377b0/4-Table2-1.png)